AI Demands to Fight Sexism & Misogyny

AI-generated content on social media is harming women, girls, and LGBTQ+ people. Nearly 30 civil, digital, and human rights organizations are calling on social media platforms to take meaningful action.

To Meta CEO Mark Zuckerberg, X CEO Linda Yaccarino, YouTube CEO Neal Mohan, TikTok CEO Shou Zi Chew, Snap CEO Evan Spiegel, and Reddit CEO Steve Huffman,

Content generated with Artificial Intelligence (AI) is rapidly growing in ubiquity on social media platforms as it becomes cheaper and easier to create images, text, audio, and video using new generative AI tools.1,2 The easier AI-generated content becomes to make, however, the more difficult it is to differentiate between synthetic and non-synthetic media.3 This blurred line opens the door to a range of opportunities for bad actors and accompanying risks for users.

And while we have witnessed the scale and scope of harms related to AI-generated content on social media increase across the board, it’s evident that these harms are not felt equally. Specifically, women, trans people, and nonbinary people are uniquely at risk of experiencing adverse impacts of AI-based content on social media. Research and reporting have shown that:

- Women, girls, and LGBTQ+ individuals are most likely to be targets of sexual AI-based manipulation, which is a form of sexual abuse. Specifically, at least 90% of victims of artificial nonconsensual explicit materials (Artificial NCEM) are women.4 And according to one 2019 analysis, at least 96% of all AI deepfakes online are nonconsensual sexual content.5 Women and queer people of color are bearing the brunt of this form of abuse due to compounded racial and gender biases.6

- Women public figures such as celebrities and journalists are more likely to be targets of sexist and political disinformation than their male counterparts, which is increasingly being spread via AI-based content and search algorithms.7,8

- Older individuals online – particularly older women – are the most likely to be targets of AI-powered scams and crimes.9

- Most researched AI systems hold a gender bias. For instance, a recent UNESCO report explored biases in three significant large language models (LLMs), OpenAI’s GPT-2 and ChatGPT and Meta’s Llama 2, and found that each model showed “unequivocal evidence of prejudice against women.” As an example, the models associated feminine names with traditional gender roles and even generated explicitly misogynistic content (e.g., “the woman was thought of as a sex object and a baby machine”).10 This bias also carries over to video and images: research has shown that when models are prompted to generate certain images, such as an engineer or a person leading a meeting, the results are most likely to be images of white men.11,12

- Women with intersecting marginalized identities are most likely to be targeted by disinformation, which is increasingly being spread via AI-based content and search algorithms.13 For instance, according to a study from the Center for Democracy & Technology, women of color candidates were twice as likely as other candidates to be targeted with or the subject of mis- and disinformation.14

- Because AI systems are typically trained on data sets that conflate gender and sex, and are designed by gender-inequitable teams, such systems run the risk of 1) entirely leaving transgender, intersex, and nonbinary people out of content, 2) perpetuating harmful stereotypes against trans and nonbinary individuals, and 3) contributing to trans scapegoating.15,16,17

Given the inequitable, sexist harms of unregulated AI-generated content, social media companies must commit to intentionally developing clearer, more transparent, and more robust AI policies that explicitly consider risks to all people with marginalized gender identities.

This is about more than platform safety, as norms and narratives that circulate online can translate offline.

That’s why we’ve partnered with 26 gender justice, online safety, and human rights organizations to demand that platforms strengthen policies around AI-generated content online through 12 specific recommendations. We are calling on your companies to carefully consider the gendered risks of AI and to implement these recommendations immediately in order to ensure your platforms are safe for all people with marginalized gender identities in the age of AI.

Download the full report here.

Signatories:

Accountable Tech

American Sunlight Project, Co-Founded and Directed by Nina Jankowicz

Center for Intimacy Justice

Chayn

Civic Shout

Digital Defense Fund

Ekō

EndTAB

GLAAD

Glitch

Global Hope 365

Higher Heights for America

Institute for Strategic Dialogue

Joyful Heart Foundation

Kairos Fellowship

MPower Change

My Image My Choice

MyOwn Image

National Organization for Women

National Women’s Law Center

Progress Florida

ProgressNow New Mexico

Religious Community for Reproductive Choice

Reproaction

Rights4Girls

Sexual Violence Prevention Association (SVPA)

Women’s March

UltraViolet

Sources:

1. Beware of Virtual Kidnapping Ransom Scam, National Institutes of Health, accessed July 10, 2024

2. Deepfake scams have robbed companies of millions. Experts warn it could get worse, CNBC, May 27, 2024

3. A.I. Is Making the Sexual Exploitation of Girls Even Worse, New York Times, March 2, 2024

4. Gender, AI and the Psychology of Disinformation, Media Diversity Institute, June 5, 2023

5. The State of Deepfakes: Landscape, Threats, and Impact, Deeptrace, September 2019

6. How AI is being used to create ‘deepfakes’ online, PBS News Hour, April 23, 2023

7. Gender Disinformation through AI Amplification, Our Secure Future, August 28, 2023

8. Disinformation Campaigns Against Women Are a National Security Threat, New Study Finds

9. Op-ed: Financial fraud targets older adults, especially women. How to recognize and prevent it, CNBC Women & Health, March 8, 2024

10. Challenging systematic prejudices: an investigation into bias against women and girls in large language models, UNESCO, March 8, 2024

11. Easily Accessible Text-to-Image Generation Amplifies Demographic Stereotypes at Large Scale, Proceedings of the 2023 ACM Conference on Fairness, Accountability, and Transparency, June 12, 2023

12. AI image generators often give racist and sexist results: can they be fixed?, Nature, March 19, 2024

13. When Race and Gender are Political Targets: Women of Color Candidates Face More Online Threats Than Others, National Press, October 21, 2022

14. An Unrepresentative Democracy: How Disinformation and Online Abuse Hinder Women of Color Political Candidates in the United States, Center for Democracy & Technology, October 27, 2022

15. Artificial Intelligence and gender equality, UN Women, May 22, 2024

16. Queer Eye for AI: Risks and limitations of artificial intelligence for the sexual and gender diverse community, Open Global Rights, May 26, 2023

17. AI Boom Poses Threat to Trans Community, Experts Warn, New York City News Service, April 9, 2024

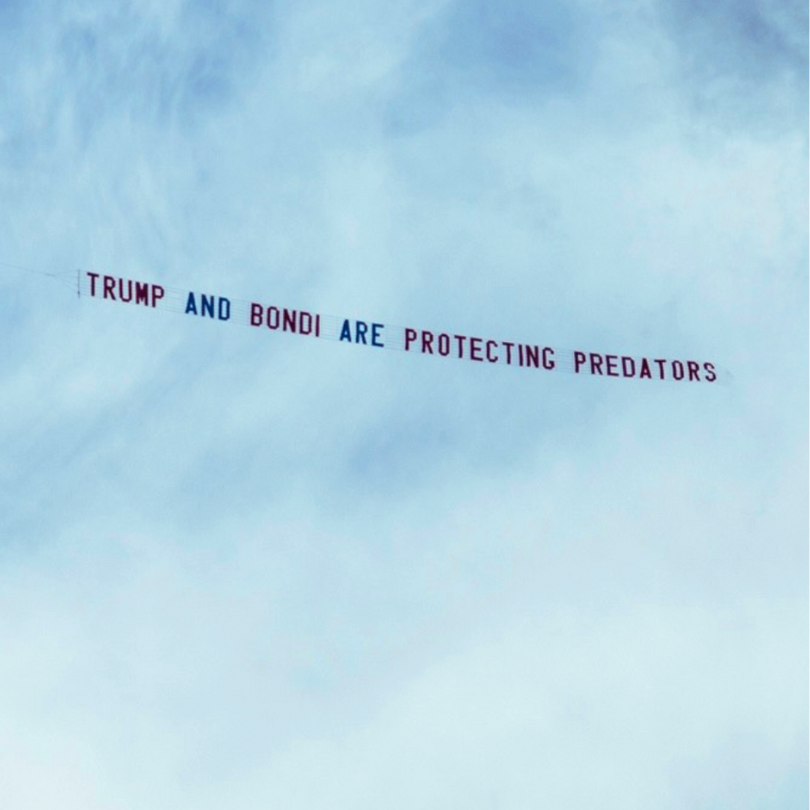

Give for survivor justice taking flight!

While Donald Trump and Pam Bondi want to change the subject, we refuse to let them off the hook for protecting predators. That’s why we're flying airplane banners to keep Trump’s ties to child sex abuser and trafficker Jeffrey Epstein in the news. Epstein’s more than 1,000 survivors deserve transparency and justice. Your gift today will keep the spotlight on Trump’s and Bondi’s deflections and center survivors in the public narrative!